We present a neural human modeling and rendering scheme compatible with conventional mesh-based production workflows. Based on dense multi-view input, our approach enables efficient and high-quality reconstruction, compression, and rendering of human performances. It supports 4D photo-real content playback for various immersive experiences of human performances in virtual and augmented reality.

Abstract

Neural techniques for photo-real human modeling and rendering have progressed tremendously in recent years. However, it remains challenging to integrate them into an existing mesh-based pipeline for downstream applications. In this paper, we present a comprehensive neural approach for high-quality reconstruction, compression, and rendering of human performances from dense multi-view videos. Our core intuition is to bridge the traditional animated mesh workflow with a new class of highly efficient neural techniques. We first introduce a neural surface reconstructor that generates high-quality surfaces in minutes. It marries implicit volumetric rendering of the truncated signed distance field (TSDF) with multi-resolution hash encoding. We further propose a hybrid neural tracker to generate animated meshes, which combines explicit non-rigid tracking with implicit dynamic deformation in a self-supervised framework. The former provides the coarse warping back into the canonical space, while the latter further predicts the displacements using a 4D hash encoding similar to that of our reconstructor. Then, we discuss the rendering schemes using the obtained animated meshes, ranging from dynamic texturing to lumigraph rendering under various bandwidth settings. To balance quality and bandwidth, we propose a hierarchical solution that first renders 6 virtual views covering the performer and then conducts occlusion-aware neural texture blending. We demonstrate the efficacy of our approach in a variety of mesh-based applications and photo-realistic free-view experiences on various platforms, e.g., inserting virtual human performances into real environments through mobile AR or immersively watching talent shows with VR headsets.

Video

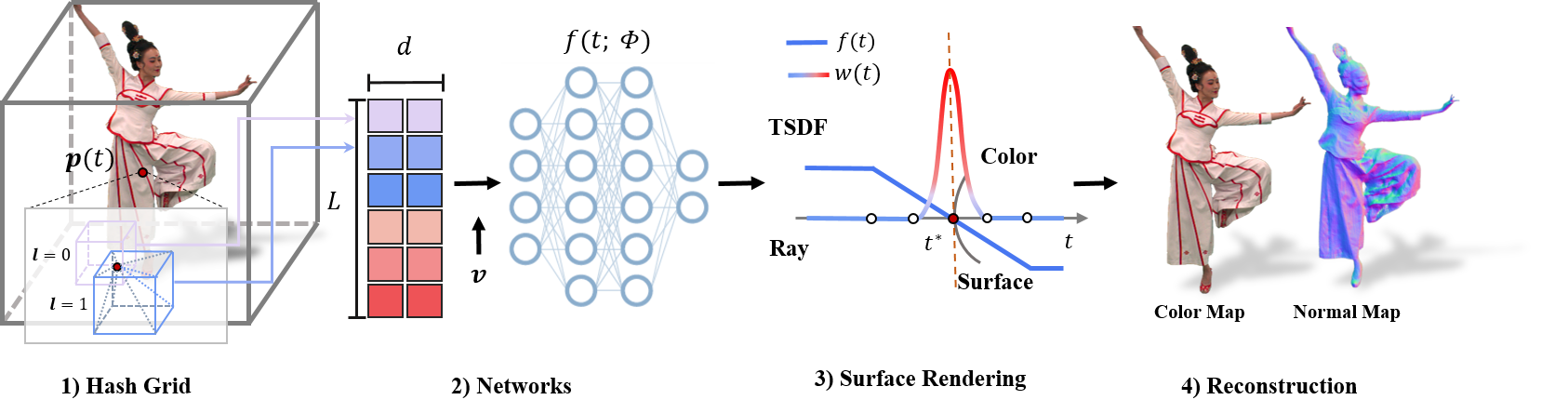

Fast Surface Reconstruction

Illustration of our fast surface reconstruction pipeline. (1) For a 3D input position x, we first find the surrounding voxel at each of the L levels of a 3D multi-resolution hash grid and compute a d-dimensional feature per level by trilinearly interpolating the voxel's eight corners. (2) A network takes the resulting feature and the view direction as inputs and outputs an SDF value and a color. (3) The SDF values are used to compute the weights for blending the colors of the sampled positions x along a ray, which yields the final pixel color used by the photometric loss in (4).
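Steps (1) and (3) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the released NSR code: the spatial-hash primes follow the common Instant-NGP convention, the SDF-to-weight conversion assumes a NeuS-style logistic formulation (the caption does not name one), and `hash_encode`, `sdf_to_weights`, `base_res`, `growth`, and `sharpness` are hypothetical names and parameters.

```python
import numpy as np

# Spatial-hash primes (assumed here; the common Instant-NGP choice).
PRIMES = (1, 2654435761, 805459861)


def hash_encode(x, tables, base_res=16, growth=2.0):
    """Encode one position x in [0, 1]^3 with L hash tables of shape (T, d)."""
    x = np.asarray(x, dtype=np.float64)
    features = []
    for level, table in enumerate(tables):
        res = int(base_res * growth ** level)   # grid resolution at this level
        p = x * res
        p0 = np.floor(p).astype(np.int64)       # lower corner of the voxel
        frac = p - p0                           # position inside the voxel
        feat = np.zeros(table.shape[1])
        for corner in range(8):
            offset = np.array([(corner >> k) & 1 for k in range(3)])
            # Hash the integer corner coordinate into the table.
            h = 0
            for c, prime in zip(p0 + offset, PRIMES):
                h ^= int(c) * prime
            h %= table.shape[0]
            # Trilinear weight of this corner.
            w = np.prod(np.where(offset == 1, frac, 1.0 - frac))
            feat += w * table[h]
        features.append(feat)
    return np.concatenate(features)             # shape (L * d,)


def sdf_to_weights(sdf, sharpness=64.0):
    """Blending weights for ray samples ordered near to far (NeuS-style, assumed)."""
    sdf = np.asarray(sdf, dtype=np.float64)
    cdf = 1.0 / (1.0 + np.exp(-sharpness * sdf))   # logistic CDF of the SDF
    alpha = np.clip((cdf[:-1] - cdf[1:]) / (cdf[:-1] + 1e-8), 0.0, 1.0)
    # Transmittance: fraction of the ray that reaches sample i unoccluded.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    return alpha * trans                           # shape (N - 1,)
```

Given per-sample colors `colors` of shape (N, 3) from the network in step (2), the pixel color would then be `(sdf_to_weights(sdf)[:, None] * colors[:-1]).sum(axis=0)`, which is the quantity compared against the observed pixel in the photometric loss.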

PyTorch Implementation of NSR

CUDA Implementation of NSR

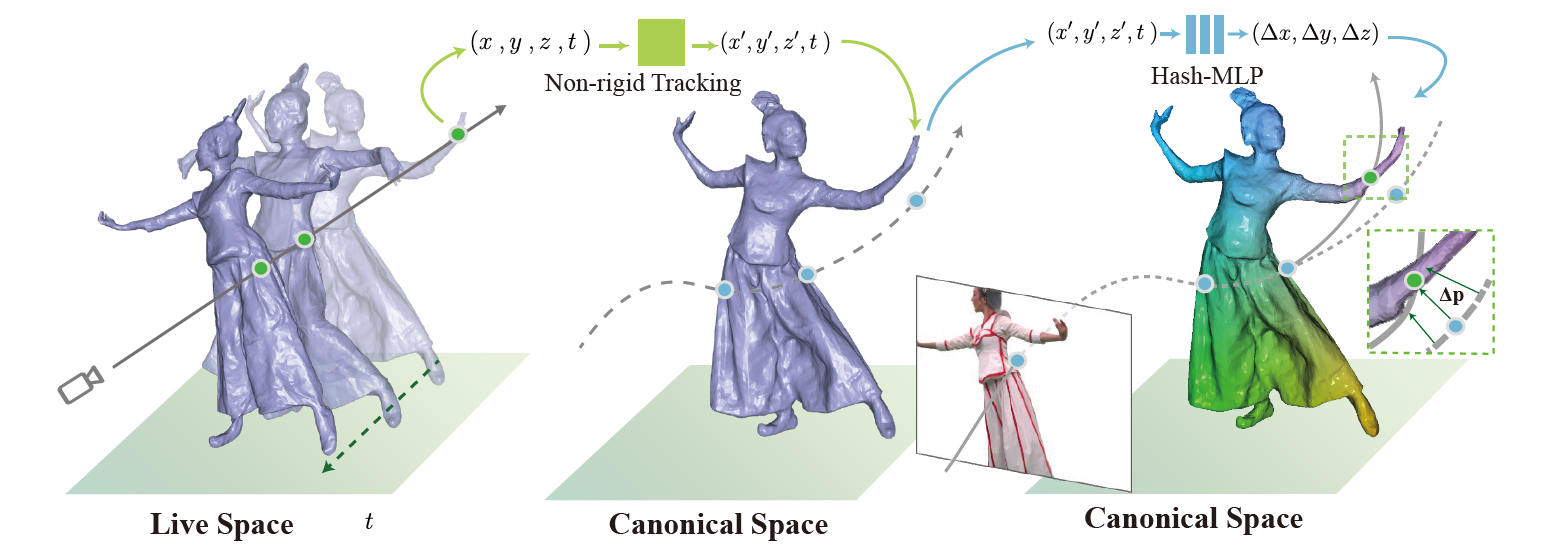

Hybrid Human Motion Tracking

Illustration of our backward warping pipeline. We perform neural tracking with a two-stage strategy. In the coarse stage (Sec. 4.1), we transform points in the live space back to the canonical space via traditional non-rigid tracking. In the fine-tuning stage (Sec. 4.2), our deformation network, based on hash encoding, learns the residual displacement.
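The two stages can be sketched as a single backward-warping function. The sketch assumes the coarse non-rigid tracking is available as per-node rigid transforms with skinning weights, already expressed as live-to-canonical maps, and it treats the deformation network as an opaque callable evaluated at the coarsely warped point and frame index. All names (`warp_to_canonical`, `residual_fn`, `skin_weights`) are hypothetical, and the inputs to the actual network may differ.

```python
import numpy as np


def warp_to_canonical(x_live, rotations, translations, skin_weights,
                      residual_fn, frame):
    """Warp live-space points (N, 3) back to canonical space.

    rotations (K, 3, 3) and translations (K, 3) are per-node rigid transforms
    that already map live -> canonical; skin_weights (N, K) has rows summing to 1.
    """
    # Coarse stage: blend the per-node rigid transforms of each point.
    per_node = np.einsum("kij,nj->nki", rotations, x_live) + translations[None]
    coarse = np.einsum("nk,nki->ni", skin_weights, per_node)
    # Fine stage: add the learned residual displacement (stand-in callable).
    return coarse + residual_fn(coarse, frame)
```

Keeping the residual as a separate additive term means the coarse tracking alone already gives a usable warp, and the network only has to explain what the explicit tracking misses.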

Results of Neural Tracking

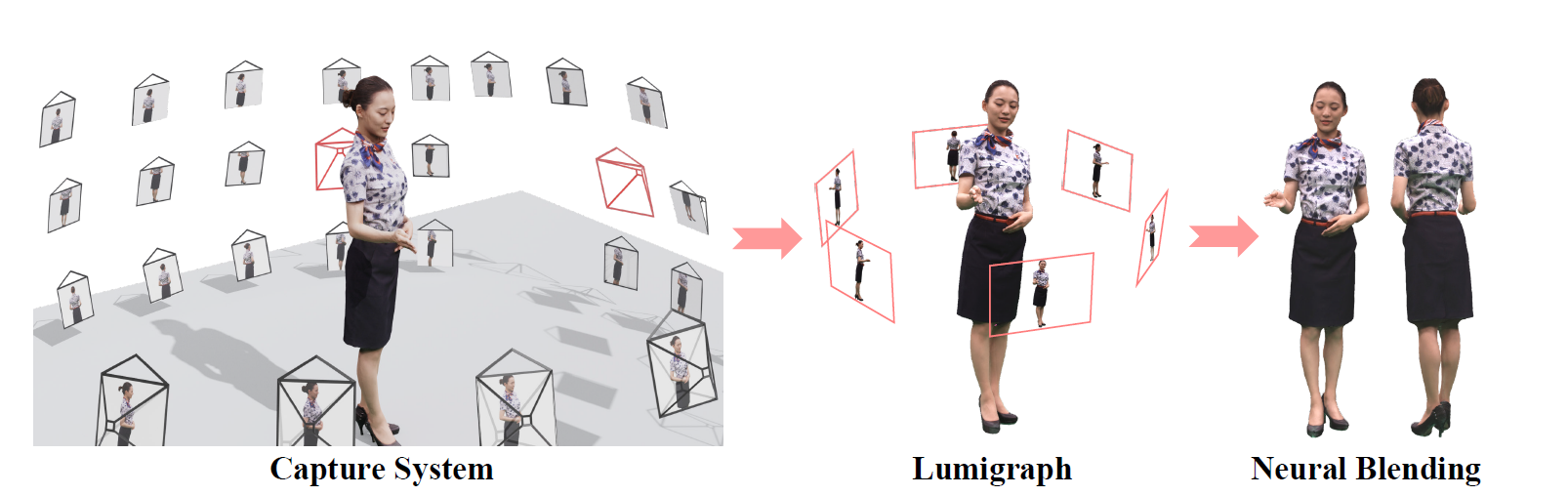

Neural Texture Blending

The pipeline of neural texture blending. The raw images captured by the black cameras in the capture system are fed into the lumigraph rendering system. We pick six novel views (the red cameras in the capture system) as the lumigraph output views. These output views, together with their corresponding depth images, are sent to the neural blending module to generate novel-view images.
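To illustrate why the depth images accompany the six output views, the sketch below blends one target pixel from several virtual views with a simple depth-consistency visibility test. It deliberately swaps the learned blending module for a hand-crafted angular weighting, so it shows only the occlusion-aware idea and is not the network described above. The function name, `depth_tol`, and `sharpness` are assumptions.

```python
import numpy as np


def blend_views(colors, map_depths, point_depths, cos_angles,
                depth_tol=0.01, sharpness=4.0):
    """Blend one pixel from V virtual views.

    colors (V, 3): color sampled from each virtual view at the reprojected pixel.
    map_depths (V,): depth-image value at that pixel in each view.
    point_depths (V,): depth of the target surface point in each view.
    cos_angles (V,): cosine between the target and virtual viewing directions.
    """
    colors = np.asarray(colors, dtype=np.float64)
    # A view sees the point only if its depth image agrees with the point depth.
    visible = np.abs(np.asarray(map_depths) - np.asarray(point_depths)) < depth_tol
    # Favor views whose direction is close to the target view.
    weights = visible * np.clip(cos_angles, 0.0, 1.0) ** sharpness
    total = weights.sum()
    if total == 0.0:
        return np.zeros(3), weights      # point occluded in every view
    weights = weights / total
    return weights @ colors, weights
```

A view whose depth image reports a closer surface than the target point is treated as occluded and contributes nothing, which avoids ghosting from textures that belong to a different part of the performer.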

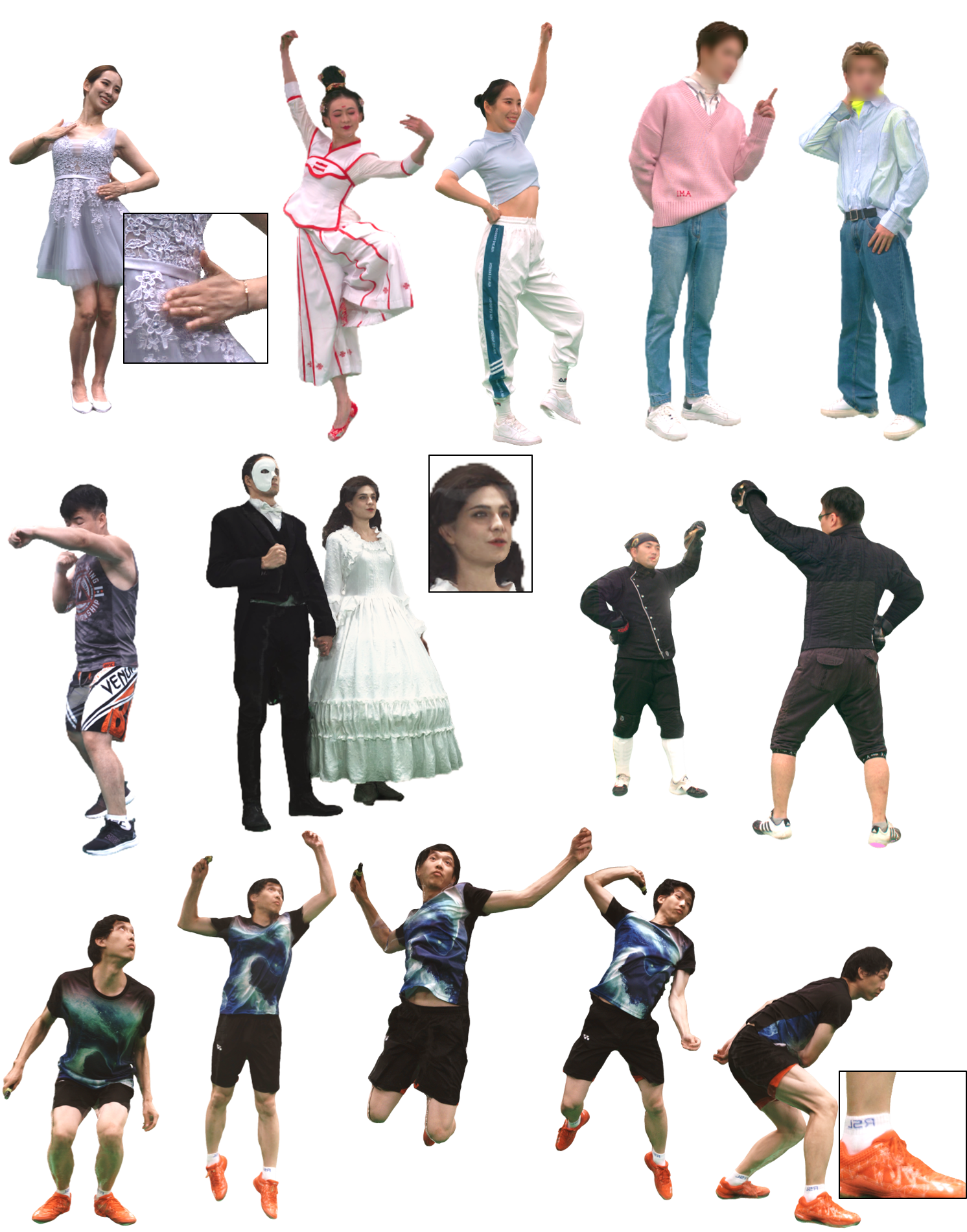

Gallery of Neural Texture Blending